Guest Post by Sydney Brogden

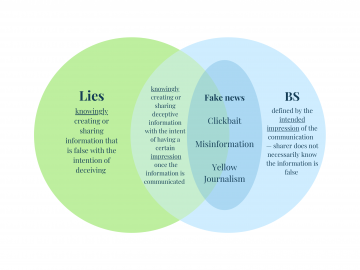

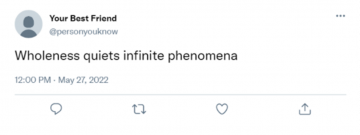

What do fake news, clickbait, misinformation, and yellow journalism all have in common? They all fit under the broader umbrella of bullshit (BS). BS is “communication that demonstrates little or no concern for truth, evidence, or established…knowledge” [1]. This implies that BS-ing is more about the impression the communication creates than about its content. BS-ing can be intentional or unintentional, and may involve embellishing or exaggerating one’s knowledge, competence, or skill [1]. For example, the statement “Wholeness quiets infinite phenomena” may seem profound at first glance, but it is actually a computer-generated string of words with no meaning. Someone might share a statement like this on Twitter to impress their followers with their profound thoughts, regardless of whether the statement means anything; the impression matters more to them than the accuracy. The difference between lying and BS-ing is that a person who lies knows that what they are saying is untrue and intends to deceive, which is not necessarily the case when BS-ing [2].

Fake news is “fabricated information that mimics news media content in form, but not in organizational process or intent” [3]. Ideally, news media are intended to inform audiences accurately about current events. Fake news can be considered BS because its content shows little concern for truth or accuracy: it is spread to create an impression rather than to communicate the truth.

Note: This graphic is the editor’s illustration of the terms in this article.

What do we know so far?

As problems related to BS have grown, researchers have become interested in the psychological mechanisms that lead people to fall for BS, and in which strategies and interventions might be effective in limiting its spread. Empirical research is key to combatting BS: its insights show which steps are likely to be most effective, so research findings should inform both how strategies are implemented and how their effectiveness is evaluated [3].

The keys to detecting BS (and therefore being able to disbelieve it) are self-regulatory resources and context [2]. Self-regulatory resources are the psychological resources we use to manage and control our own thoughts, feelings, and behaviours [2]. As a person becomes tired or experiences mental load, these resources are diminished, and the person is less likely to detect BS or to refrain from BS-ing [2]. Context matters as well: for example, if a trusted friend shares some information via social media, a follower may be more likely to accept it as true on the basis of that trust, without reviewing it further. In contexts where a person has limited self-regulatory resources and feels socially obligated to give an opinion, they are more likely to BS, even if they know nothing about the subject [1]. Relatedly, people tend to BS less when they expect that they will have to explain themselves [2].

What’s happening now?

Imagine you have finished a long day of work or school and are scrolling through Twitter in the evening for entertainment. You come across the Tweet above, posted by a friend you know well. How you interact with it could depend on the mental resources you have available at the time (which may be limited after your long day) and on what a typical Tweet by this friend looks like. For example, you might read the Tweet and scroll past it without further thought about what it means or why your friend posted it: you are too tired to care and do not notice anything strange about it. Alternatively, you might be confused by its content but assume that its meaning is too profound for you to grasp; this is especially likely if your friend normally posts similar content. However, as mentioned earlier, this quote is computer-generated BS devoid of actual meaning. Considering the Tweet in a more active, cognitively effortful way would reveal that it makes no sense at all. The tricky part is realizing that further consideration is needed, but nobody can be expected to detect every instance of BS they come across.

One promising method for combatting BS is “Birdwatch”, an intervention recently released by Twitter [4]. In Birdwatch, community users flag questionable content and write notes that add context, encouraging further engagement and consideration. There are three reasons this intervention shows promise in ways that align with current research:

- Scalability: paying professional fact checkers is expensive, and there can never be enough of them to address all the BS, but politically balanced groups of laypeople can provide similar judgements [6]

- Chain reactions: when individuals are primed to consider accuracy, they are less likely to share BS content, so their followers encounter more accurate content, and so on [5]

- Proactivity: the approach is proactive rather than reactive [5]

What does it mean?

As strategies and research come together, combatting BS and therefore fake news could become more effective. In the meantime, BS will still sneak through the current interventions, which means it will continue to be up to individual users to evaluate the content they interact with. This is difficult, as we all have limited self-regulatory resources and may not always detect BS, even if we try. To help with this, consider the following:

Questions to Consider

What other types of strategies do you think might be helpful in combatting BS?

How does the fast-paced nature of social media and the internet contribute to the spread of BS?

Further Resources

- Check the facts with our fact-checking tutorial

- Consider the source: is it reliable? Does the source have any motivation to produce BS? Does the source have any motivation to combat BS?

- For more information about fake news and misinformation, see our previous posts

- You can also quiz yourself on your ability to spot fake news

References

[1] Petrocelli, J. V. (2018). Antecedents of bullshitting. Journal of Experimental Social Psychology, 76, 249–258. https://doi.org/10.1016/j.jesp.2018.03.004

[2] Petrocelli, J. V., Watson, H. F., & Hirt, E. R. (2020). Self-regulatory aspects of bullshitting and bullshit detection. Social Psychology, 51(4), 239–253. https://doi.org/10.1027/1864-9335/a000412; PDF available at https://www.researchgate.net/publication/341055056_Self-Regulatory_Aspects_of_Bullshitting_and_Bullshit_Detection

[3] Bago, B., Rand, D. G., & Pennycook, G. (2020). Fake news, fast and slow: Deliberation reduces belief in false (but not true) news headlines. Journal of Experimental Psychology: General, 149(8), 1608–1613. https://doi.org/10.1037/xge0000729; PDF available at https://gordonpennycook.files.wordpress.com/2020/02/bago-rand-pennycook-2020.pdf

[4] Coleman, K. (2021, January 25). Introducing Birdwatch, a community-based approach to misinformation. Twitter. Retrieved November 2, 2021, from https://blog.twitter.com/en_us/topics/product/2021/introducing-birdwatch-a-community-based-approach-to-misinformation

[5] Pennycook, G., Epstein, Z., Mosleh, M., Arechar, A. A., Eckles, D., & Rand, D. G. (2021). Shifting attention to accuracy can reduce misinformation online. Nature, 592(7855), 590–595. https://doi.org/10.1038/s41586-021-03344-2; preprint available at https://psyarxiv.com/v8ruj (https://doi.org/10.31234/osf.io/v8ruj)

[6] Allen, J., Arechar, A. A., Pennycook, G., & Rand, D. G. (2021). Scaling up fact-checking using the wisdom of crowds. Science Advances, 7(36), eabf4393. https://doi.org/10.1126/sciadv.abf4393

[7] MacKenzie, M. (2017). Fake News—Person Reading Fake News Article [Photo]. https://www.flickr.com/photos/mikemacmarketing/36588153031/

Written By: Sydney Brogden, UBC, School of Information (Guest Contributor)

Edited By: Brittany Dzioba, Alex Kuskowski, & Eden Solarik